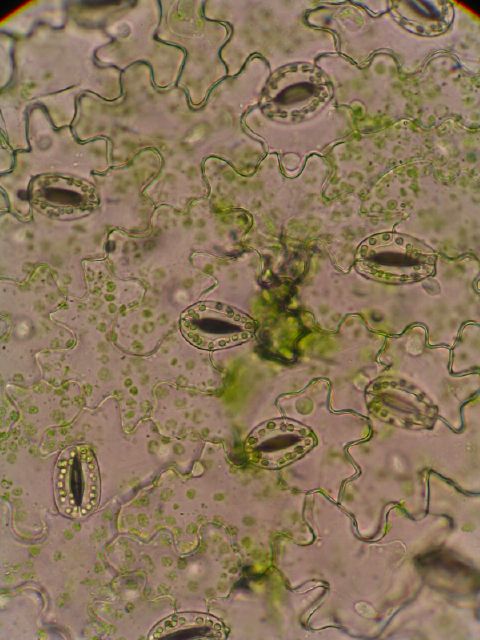

This is a close-up of unstained epidermal tissue from the underside of a broad bean (Vicia faba) leaf. Taken through the eyepiece of an Olympus EX microscope with a 40x objective lens, using a Canon PowerShot A75, and scaled down. The little doughnut-shaped structures are pairs of guard cells (the halves of the doughnut) surrounding the dark oval of the stomatal pore (stoma). The jigsaw-puzzle pieces are epidermal cells. The little salad-green “bubbles” are chloroplasts, both in the guard cells and in the epidermal cells. The stomatal pore actually has a bunch of small grooves, similar to fingerprints, but they were lost due to camera shake.

Category: General

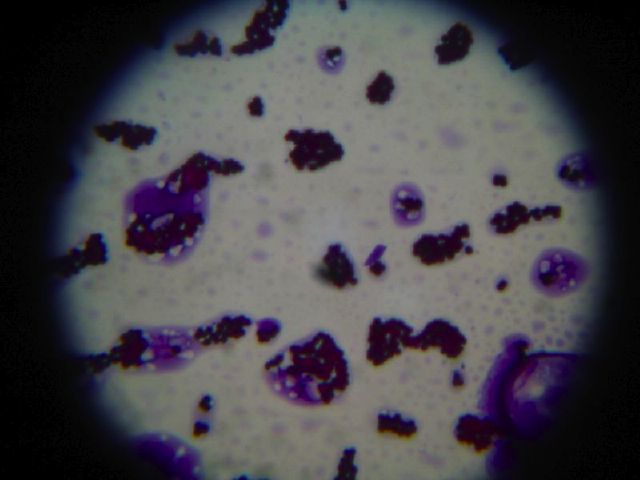

Photos of bacteria.

This is just one of many photos of Gram-stained bacteria that I took in the lab. 100x magnification, Leica microscope.

Part of the reason the focus is not all that great is that I forgot to reset the diopter correction back to neutral (I am slightly near-sighted). Essentially, the idea is to take a camera (I used a Canon PowerShot A75), turn off the flash, and hold the camera lens right up to the eyepiece of the microscope. You might (or might not) get better results with macro mode turned on.

Overall, not too bad, although this one was taken with an exposure of a few seconds, so it shows some hand shake too.

Boathouse on Rideau Canal


I took this around 1 am on Saturday. The Congress Centre and a new building near the Chateau Laurier are in the background.

Somehow this reminded me of my brief time in Amsterdam.

Farewell, National Capital Freenet

I’ve been a member of National Capital Freenet for about 11 years. For about a year before getting an account on Freenet, I was using VT320 terminals at the Ottawa Public Library and logging in as guest to read the newsfroops. At some point I got my parents involved, they co-signed on my behalf, and I got my own Freenet user ID: cn119.

Freenet changed over the years. Originally it was primarily a text-based service, with the ability to dial in for up to half an hour a day using PPP on a 9600 bps modem and connect to the Internet. The FreePort software was the primary means of interacting with the system. It had a god-awful e-mail interface, with pico to compose e-mails (nothing like introducing new users to bad Unix habits, right?), until Mark Mielke finally hacked together elm and duct-taped it onto FreePort. FreePort had a bunch of holes: San Mehat at one point showed me that one could drop to a real shell. I seem to recall that it was possible to trick early versions of lynx into executing a real shell on NCF as well, but this was 10 years ago, so my memory is hazy.

In any event, before I started at iStar and had a real newsfeed (Thank you, John Henders@bogon/whimsey, wherever you are. Heck, thank you to all the folks at the iStar NOC, and DIAL. Oh, and Jason “froggy” Blackey, for sure. And Tim Welsh. Jeff Libby. Steven Gallagher, to whom I must have given plenty of white hairs. Most definitely the GJ/Jennifer tag team. And Mike Storm. And farewell, Chris Portman), NCF was my primary way of reading alt.sysadmin.recovery. For a while, many moons ago, cn119@freenet.carleton.seeay was my primary e-mail address.

I guess this is over by now.

Parting was somewhat bittersweet.

A few years ago NCF got swamped by spam. I remember having to delete ~700 spam messages a day. Some sort of mail filtering solution was implemented; I never really cared, as by that point I wasn’t using NCF e-mail for anything, but the spam stopped. A couple of years later I noticed that all mail had kind of stopped too.

(In retrospect, to the best of my current understanding (and I really don’t care), a dedicated procmail system was implemented, which in turn filtered mail into a POP3-accessible mail queue on a dedicated system, or some such. And, of course, I never checked the POP3 mail queue, and for years wasn’t aware of its existence. Telnet, baby, telnet.)

By ~2002 the only thing I was using NCF for was newsfroops, as I could read ott.forsale.computing a lot more efficiently through a real newsreader than through a web interface.

I guess I should mention that NCF operates on donations. Every year one is expected to donate some money to keep NCF running. To encourage that, NCF accounts expire every year, and one has to go to Dunton Tower at Carleton to renew them and, hopefully, give them some money. Every year I’d donate between $20 and $50, and even after the e-mail renewal notices stopped coming, for a couple of years I’d be on the Carleton campus writing a final, remember to stop by, and remind the folks at NCF that I was still around and still cared. One day I asked whether one had to donate in order to get one’s account renewed, and it turned out that no, one can donate nil and still keep on using Freenet. Hrm. Then why expire accounts at all? So that you remember to donate.

This year I forgot to renew my account.

So last Thursday I logged in, only to see:

```
cn119@134.117.136.48's password:
Last login: some date from some ip address.
Sun Microsystems Inc.   SunOS 5.8       Generic Patch   October 2001
-------------------------------------------------------------------------
This National Capital FreeNet      |  Le compte de cet usager du
user account has been archived.    |  Libertel de la Capitale Nationale
                                   |  a ete archive.
NCF Office / Bureau LCN : (613) 520-9001   office@freenet.carleton.ca
-------------------------------------------------------------------------
Connection to 134.117.136.48 closed.
```

That morning I was at work, in a dark basement with no cell phone coverage, with someone on the phone convinced that I cared that his blueberry iMac’s power supply had failed, and that I would find him a replacement power supply for cheap. Why do I always get cheap customers?

So the plan was to look for something similar on ott.forsale.computing and, failing that, order a replacement G4 chassis from CPUsed in Toronto. This is where things didn’t go as planned, as I couldn’t log in to NCF.

So, logically, the thing to do was to ask someone at NCF to “unarchive” my account (in practice, change my login shell back to FreePort; I know my home directory is still there, as I can access http://freenet.carleton.ca/~cn119/ and see the same old junk that has been there for the last 7 or 8 years), and promise to stop by in person and give NCF more money.

I called the above number, only to hear it ring and be told that no one was around to answer my call, and that I should call 520-5777. Oh, and I could leave voice mail.

So I called 520-5777. It rang once, and then told me that no one was available and that I should leave voice mail. I hung up and called again 5 minutes later. Same result. After calling seven times at 5 to 10 minute intervals, I left voice mail. I identified myself and the problem I was experiencing. In it I pointed out that I was unimpressed by the lack of warning about account expiry, and unimpressed that I couldn’t talk to a human being about it. I mentioned that I wasn’t sure anyone would call me back, which is why I am not really happy with voice mail. I pointed out that I had no coverage where I was, so they would have to leave voice mail when they called back. If they called back. I guess I was overly snarky in my message.

Around 4 pm I got out of the basement for a breath of fresh air. My cell phone chirped with a “new message” notification, and I learned that I had new voice mail. The voice mail was from Brian at NCF, and ran for over 7 minutes. In it Brian (or Ryan) told me how busy he is, how Freenet has over Eight Thousand members and by talking to me he is not talking to someone else on the phone, how upset he is with me, etc. The main idea of the message was that I should come to Dunton Tower in person.

Frankly, I wasn’t impressed by this point. I was expecting either “Your account is renewed, do stop by and remember to donate” or “Stop by Dunton Tower, we will renew your account then”. Instead I got 7 minutes of someone telling me how busy he is, how bad I am for taking Ryan (or Brian) away from answering phones, and how ungrateful I am for not donating so that Brian could be hired full time.

While listening to it, I had a WTF moment. Admittedly it wasn’t the first one of the day, as I get WTF moments at work all the time, but still…

So the next day I stopped by the NCF offices in Carleton’s Dunton Tower in person. I got to observe Brian in his natural habitat. Frankly, he reminded me of someone… of myself, about 10 years ago. Back when my ego was bigger and more easily bruised. Back when I thought that I was really hardcore, and everyone else less so.

For about 10 minutes I listened to Brian talking to someone who sounded like a shut-in in search of human interaction, trying to explain to him where to click. I hear conversations like this at work all the time; they are the bank breakers, as a technician spends a good hour or two hand-holding someone with no financial remuneration at all. Talking for an hour to someone who has a limited grasp of computing, and at the end telling him to see if he has a friend with a dial-up account at some other ISP who will let him try that phone number and user ID, to see if the software will recognize the modem and successfully negotiate PPP? Why not cut one’s losses, talk to any of the other people in the call queue, and maybe actually help them?

Eventually Brian and I had a conversation. It didn’t go over too well. Brian was reluctant to do anything; however, he repeatedly pointed out that he was not answering the phones while talking to me.

He pointed out that NCF serves over 8000 members. I mentioned that I don’t find that all that impressive, because around 1997 they had 30000 active members, and they seem to have been hemorrhaging users over the last 10 years. I remember when ‘w’ on FreePort would list pages and pages of logged-in users, not the roughly 20 users (10 of which would be xxnnn accounts, which are the accounts of Freenet volunteers) that it shows now. In other words, FreeNet’s 8000 users is nothing. Cyberus has many more. iStar had about eighty thousand users when I worked for them.

Brian mentioned that the reason NCF doesn’t have a hold queue is that there is voice mail. He expanded on it by saying that he is not the kind of person who would call the Department of Transportation, but would go there in person. I wondered whether he realized that the time they spent talking to him in person they could have spent answering someone on the phone.

Brian also pointed out that NCF was the first ISP in Ottawa, if not in Canada. I am not too sure about that, and pointed out that resudox.net (I happened to know Steve Birnbaum, in another life) started in 1993 too, maybe even earlier. Brian snorted and asked where resudox is now. Heck, if I had rent-free space, donated bandwidth, servers, phone lines, modem racks, etc., I’d also be around for years. Somehow no one else has such an advantage, and thus they actually have to make money somehow.

It was obvious that we weren’t seeing eye to eye.

At that point I asked him directly whether he could renew my account, and he told me that the matter would be referred to the executive director of NCF, John Selwyn, for review. Only he can renew my account.

I called John and left him voice mail (notice a common theme in my dealings with NCF?). Yesterday I stopped by offices 2018 and 2019 in Dunton Tower to see if he might be in, so I could talk to him.

So far no answer.

I am not holding my breath.

Frankly, if all of Brian’s complaints about the lack of funding are true, NCF loses more by losing yet another member who was donating. I can use groups.google.com.

Farewell, NCF. It was a long ride, but I guess it’s over now.

In any event, I want to thank all the folks who at one point made NCF great, and whom I more or less knew.

Paul Tomblin, NCF’s newsadmin. I have forgotten by now what it was that Paul helped me with many, many years ago, but the feeling of gratitude remains. And the NCF news server works, and I am not upset that it doesn’t carry alt.binaries 😛

Ian! D. Allen, formerly technical director of NCF. I’ve interacted with him many a time at OCLUG meetings.

Mark Mielke, who, besides hacking NCF, also hacked LCInet at Lisgar. Lisgar was a melting pot of folks. I met Sierra Bellows at Lisgar too. She is Ian! D. Allen’s stepdaughter. Somewhere I have a CD of her singing from 1995 or so. Small world.

Roy Hooper, who gave up on running NCF and instead ended up running Cyberus (and hiring me to run Cyberus instead), and now, I’ve heard, runs CIRA. Roy used to be NCF’s sysadmin.

GJ Hagenaars, who also gave up on running NCF and instead ended up running DIAL at iStar (and hired me “to write technical documentation. Part time.”). GJ was NCF’s postmaster and, coincidentally, is responsible for my hatred of sendmail and love of exim.

Jennifer Witham, who was right, and in her “tough love” way very supportive. Jennifer, you were right, you hear? I was wrong. Oh, and Jennifer was volunteer of the month back in 1997.

Pat Drummond, for always being helpful, and Chris Hawley, I guess also for being helpful. By now I have forgotten what it was that Chris Hawley did, and it might have been minor, like changing permissions on something in my ~, but it was a huge deal back then, and the feeling of gratitude remains.

Thank you, folks.

Storage and power consumption costs

Lately I’ve been thinking more and more about storage. At one point I used a Promise 8-disk IDE-to-SCSI hardware RAID enclosure, attached to a Sun system and formatted with UFS.

Hardware RAID 5 eliminated the problem of losing data when a disk died a fiery death. I bought 10 Maxtor 120 gig drives at the time and put two on the shelf. Over the course of about two and a half years I used both spare drives to replace ones inside. Once it was a bad block, and the other time a drive had trouble spinning up. Solaris 8 supported only one filesystem snapshot at a time, which was better than no snapshots at all, but not great. I had a script in cron running once a week that would snapshot whatever was there and recycle the snapshot each week. Not optimal, but it saved me some stress a couple of times, when it was late, I was tired, and I put a space between the wildcard and the pattern in an rm command.
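The weekly rotation described above can be sketched roughly as follows. This is not the original cron script (the post doesn’t show it); it is written in Python for readability and only assumes the Solaris `fssnap` command: `fssnap -d` to drop the existing snapshot, and `fssnap -F ufs -o backing-store=...` to take a new one. The mount point, backing-store directory, and the `unlink` sub-option are assumptions worth checking against fssnap_ufs(1M) on the release in question. By default it only prints the commands.

```python
import subprocess
import sys

# Hypothetical values: the post does not say which UFS filesystem was
# snapshotted or where the backing store lived. The backing store must sit
# on a different filesystem from the one being snapshotted.
MOUNT_POINT = "/export/data"
BACKING_STORE_DIR = "/var/tmp"


def rotation_commands(mount_point: str, backing_store_dir: str) -> list[list[str]]:
    """Build the two fssnap calls for one weekly rotation: drop the old
    snapshot, then take a fresh one, so only one snapshot exists at a time."""
    delete_old = ["fssnap", "-d", mount_point]
    # Assumption: 'unlink' makes the backing-store file go away together with
    # the snapshot; verify against fssnap_ufs(1M) on your release.
    create_new = ["fssnap", "-F", "ufs",
                  "-o", f"backing-store={backing_store_dir},unlink",
                  mount_point]
    return [delete_old, create_new]


def rotate(mount_point: str, backing_store_dir: str, dry_run: bool = True) -> None:
    delete_old, create_new = rotation_commands(mount_point, backing_store_dir)
    if dry_run:
        for argv in (delete_old, create_new):
            print(" ".join(argv))
        return
    # On the very first run there is no snapshot to delete yet, so a non-zero
    # exit status from the delete step is ignored.
    subprocess.run(delete_old, check=False)
    subprocess.run(create_new, check=True)


if __name__ == "__main__":
    # Prints the commands by default; pass --run to actually execute them.
    rotate(MOUNT_POINT, BACKING_STORE_DIR, dry_run="--run" not in sys.argv)
```

A hypothetical crontab entry such as `0 3 * * 0 /usr/local/bin/rotate_snapshot.py --run` would run it early every Sunday morning. Deleting before creating is what keeps the scheme within a one-snapshot-at-a-time limit.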

For the last little while I’ve been trying to migrate to Mac OS X. Part of the reason was the cost of operation. I like big iron, and paying for an E4000 and an external storage array running 24/7 was getting costly when one is a student, as opposed to a productive member of the workforce. I thought it would be cheaper to leave an old G3 iBook running 24/7; after all, the iBook itself only “eats” 65 watts, right? I turned off most of the other hardware: the Cisco 3640 got replaced by a Linksys WRT54GS running OpenWRT, three other Sun systems got powered down, etc. At this point I only had an iBook and an Ultra 2 running 24/7.

This is when I hit the storage crunch: I was rapidly running out of disk space again, and I still needed occasional access to the data on the old Promise storage array.

The easy solution was to buy more external disk drives, put them in MacAlly USB2/FW external enclosures, and daisy-chain them off the iBook. Somehow the iBook ended up with over a TB of disk space daisy-chained off it.

```
fiona:~ stany$ df -h
Filesystem                 Size   Used   Avail Capacity  Mounted on
/dev/disk0s10               56G    55G    490M    99%    /
devfs                      102K   102K      0B   100%    /dev
fdesc                      1.0K   1.0K      0B   100%    /dev
<volfs>                    512K   512K      0B   100%    /.vol
automount -nsl [330]         0B     0B      0B   100%    /Network
automount -fstab [356]       0B     0B      0B   100%    /automount/Servers
automount -static [356]      0B     0B      0B   100%    /automount/static
/dev/disk4s2               183G   180G   -6.3G   104%    /Volumes/Foo
/dev/disk2s1               183G   182G   -2.3G   101%    /Volumes/Bar
/dev/disk1s1               183G   183G   -1.0G   101%    /Volumes/Baz
/dev/disk3s1               183G   174G -260.8M   100%    /Volumes/Quux
/dev/disk5s1               183G   183G   -1.2G   101%    /Volumes/Shmoo
fiona:~ stany$
```

In the process I discovered how badly HFS+ sucks at a bunch of things: it will happily create filenames with UTF-8 characters, yet it balks at things like the accent grave or accent aigu in ordinary file names. Migrating files with such filenames from UFS under Solaris turned out not to be simple: direct copying over NFS or SMB failed, and untarring archives containing such files resulted in errors.
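One plausible contributor to this, offered as an assumption rather than a diagnosis from the post: HFS+ stores file names in a decomposed Unicode form (a variant of NFD), while names created on Solaris were most likely precomposed (NFC), or not UTF-8 at all but Latin-1. The sketch below uses Python’s standard `unicodedata` module to show how the “same” name turns into different code points and bytes; the file name itself is made up for illustration.

```python
import unicodedata

# A name as it would typically arrive from Solaris: "café.txt" with a
# precomposed é (U+00E9), i.e. Unicode NFC.
name = "caf\u00e9.txt"

nfc = unicodedata.normalize("NFC", name)
nfd = unicodedata.normalize("NFD", name)  # é -> "e" + U+0301 COMBINING ACUTE ACCENT


def same_name(a: str, b: str) -> bool:
    """Compare file names the way a normalization-aware tool would."""
    return unicodedata.normalize("NFC", a) == unicodedata.normalize("NFC", b)


print(len(nfc), len(nfd))    # 8 9
print(nfc == nfd)            # False: same glyphs, different code points
print(same_name(nfc, nfd))   # True
print(nfc.encode("utf-8"))   # b'caf\xc3\xa9.txt'
print(nfd.encode("utf-8"))   # b'cafe\xcc\x81.txt'

# A name stored as Latin-1 bytes rather than UTF-8 is not valid UTF-8 at all,
# which is another way such a file name can fail to come across.
latin1_bytes = name.encode("latin-1")
try:
    latin1_bytes.decode("utf-8")
except UnicodeDecodeError as err:
    print("not valid UTF-8:", err.reason)
```

A tool that byte-compares names, rather than normalizing them first, will treat the two forms as different files, which is one way copies and untars can trip up.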

Eventually I used the sick workaround of ext2fsx and formatted a couple of external 200 gig drives as ext2. Ext2 under Mac OS blows chunks too: for starters, it was not available for 10.4 for ages, and thus Fiona is still running 10.3.9 (yes, I know that a very preliminary read-only version of ext2fsx for 10.4 is now available; no, I don’t want to beta-test it and lose my data). ext2fsx does not support ext3, so one doesn’t get any journalling. So if I accidentally pull on the FireWire cable and unplug the daisy chain of FW drives, I will have to fsck them all.

fscking ext2 under Mac OS is a dubious proposition at best, and most of the time fsck_ext2 will not leave an auto-mountable filesystem behind. The solution was to keep a Rock Linux PPC CD in the drive and boot into Linux to fsck.

I cursed, set all the external drives to automount read-only, and manually remount them read-write when I need to. A pain in the backside.

Lately I’ve been eyeing Solaris ZFS with some interest. The big stopping point for me was migrating a volume to a different system (whether the same OS and architecture, or a different OS and architecture altogether). It turns out that migrating between Solaris systems is as simple as zpool export poolname, moving the disks to the other system, and zpool import poolname, which is a big win. Recently there were rumours that Linux folks and Apple folks are porting, or investigating porting, ZFS to Linux and Mac OS X (10.5?), which gives hope of being able to migrate to a different platform if need be.

All of that made ZFS (and by extension Solaris 10) a big contender.

It didn’t help that each of the external drive power supplies is also rated at 0.7 amps. Five drives at 0.7 A each comes to 3.5 amps. One watt is one ampere of current flowing at one volt, so at 110 V the current is simply the wattage divided by 110. The 65 watts the Apple iBook power adapter is rated for is only about 2/3 of what it actually draws, as the adapter also generates heat, so here is another 0.7 amps or more. Oh, and there is the old Ultra 2, which, according to Sun, consumes another 350 W and generates 683 BTU/hr. So, assuming Sun actually means that it consumes 350 W, and not that the power supply is rated for 350 W, that’s another 3.2 amps of load.

This adds up to ~7.5 A, 24/7.
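The load estimate above, written out as a small sketch. It assumes a 110 V line and takes the post’s “about 2/3” efficiency for the iBook adapter literally, so the iBook term comes out nearer 0.9 A than 0.7 A and the total lands around 7.6 A, in line with the rounded ~7.5 A.

```python
# Sketch of the load estimate. Assumptions: 110 V line voltage, and the
# post's rule of thumb that the iBook adapter delivers only ~2/3 of what it
# draws from the wall. Watts = amps x volts, so amps = watts / volts.

LINE_VOLTS = 110.0


def amps_from_watts(watts: float, volts: float = LINE_VOLTS) -> float:
    return watts / volts


drives = 5 * 0.7                               # five external enclosures, 0.7 A each
ibook = amps_from_watts(65.0) / (2.0 / 3.0)    # 65 W output at ~2/3 efficiency
ultra2 = amps_from_watts(350.0)                # Sun's 350 W figure for the Ultra 2

total = drives + ibook + ultra2
print(f"drives {drives:.2f} A, iBook {ibook:.2f} A, Ultra 2 {ultra2:.2f} A")
print(f"total {total:.2f} A")                  # total 7.57 A
```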

This is where I get really confused reading Ottawa Hydro bills.

Looking at the Ottawa Hydro rates page, I read:

Residential Customer Rates

- Electricity*
  - Consumption up to 600 kWh per month: $0.0580/kWh
  - Consumption above the 600 kWh per month threshold: $0.0670/kWh
- Delivery
  - Transmission: $0.0095/kWh
  - Hydro Ottawa Delivery: $0.0195/kWh
  - Hydro Ottawa Fixed Charge: $7.88 per month
- Regulatory: $0.0062/kWh**
- Debt Retirement: $0.00694/kWh***

Thus, some basic math shows that:

7.5 A × 110 V = 825 W

The 600 kWh that Ottawa Hydro is oh so generously offering me works out to 600,000 Wh / 31 days / 24 hours = ~806 W of continuous draw.

In other words, I am using up the “cheap” allowance just by keeping two computers and 5 hard drives running.

825 W × 24 h × 31 days = 613.8 kWh

Reading all the Ottawa Hydro debt retirement (read: mismanagement) bullshit, I get (energy + debt retirement + regulatory; delivery and transmission left out):

6.7 cents + 0.694 cents + 0.62 cents = 8.014 cents/kWh

613.8 kWh × 8.014 cents/kWh = 4919 cents = 49.19 CAD/month

Now, assuming I were paying 5.8 rather than 6.7 cents/kWh, it would still be 613.8 kWh × 7.114 cents/kWh = 43.67 CAD/month.
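Here is the same arithmetic as a quick sketch, so it can be rerun with other loads. It uses the per-kWh rates quoted above and, like the hand calculation, leaves out delivery, transmission and the fixed charge; the 110 V supply and 31-day month are assumptions. The `tiered_cost` function goes one step beyond the post: it bills the first 600 kWh at 5.8 cents and only the remainder at 6.7 cents, which is how a two-tier rate would normally apply.

```python
# Sketch: monthly energy cost of the always-on setup, using the per-kWh rates
# quoted above. Assumptions: 110 V supply, a 31-day month, and delivery,
# transmission and the fixed charge left out, as in the hand calculation.

HOURS_PER_MONTH = 24 * 31
VOLTS = 110.0

TIER_LIMIT_KWH = 600.0
LOW_RATE = 5.8      # cents/kWh, first 600 kWh
HIGH_RATE = 6.7     # cents/kWh, above 600 kWh
REGULATORY = 0.62   # cents/kWh
DEBT = 0.694        # cents/kWh, debt retirement


def monthly_kwh(amps: float) -> float:
    """Energy used by a constant load running around the clock for a month."""
    watts = amps * VOLTS
    return watts * HOURS_PER_MONTH / 1000.0


def flat_cost(kwh: float, energy_rate: float) -> float:
    """Dollars, with every kWh billed at one energy rate (the post's two bounds)."""
    return kwh * (energy_rate + REGULATORY + DEBT) / 100.0


def tiered_cost(kwh: float) -> float:
    """Dollars, billing the first 600 kWh at the low rate and the rest at the high rate."""
    low = min(kwh, TIER_LIMIT_KWH)
    high = max(kwh - TIER_LIMIT_KWH, 0.0)
    energy_cents = low * LOW_RATE + high * HIGH_RATE
    return (energy_cents + kwh * (REGULATORY + DEBT)) / 100.0


kwh = monthly_kwh(7.5)
print(f"allowance as continuous draw: {TIER_LIMIT_KWH * 1000 / HOURS_PER_MONTH:.0f} W")  # 806 W
print(f"monthly use:  {kwh:.1f} kWh")                    # 613.8 kWh
print(f"all at 6.7 c: ${flat_cost(kwh, HIGH_RATE):.2f}")  # $49.19
print(f"all at 5.8 c: ${flat_cost(kwh, LOW_RATE):.2f}")   # $43.67
print(f"tiered:       ${tiered_cost(kwh):.2f}")           # $43.79
```

Under a true two-tier reading the bill sits close to the lower bound, since only 13.8 kWh spill over the 600 kWh threshold.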

Not a perty number, right?

So now I am asking myself: what should I do?

I have two large sources of energy consumption: the external drives (I didn’t realize how much power they draw) and the Ultra 2. The iBook on its own consumes minimal power, and thus costs at most about $10/month to operate.

Option number one – turn off everything, save 50 bucks a month.

Option number two – leave everything running as is, and swallow the “costs of doing business”.

Option number three – turn off the Ultra 2 for average savings of $22/month, and lose my e-mail archives (or migrate pine and e-mail to the iBook). Continue living with the frustrations of HFS+.

Option number four – migrate mail from the Ultra 2 to the iBook. Turn the Ultra 2 off. Move all of the drives into the Promise enclosure (how much power it consumes I honestly won’t know until I borrow a power meter from somewhere; Promise doesn’t list any information, and there is none on the back of the thing), and hook it up to the iBook over a RATOC SCSI-to-FireWire dongle. This will give me somewhere between 1.5 and 2 TB of storage, HFS+ or ext2 based. If I decide to install Linux or FreeBSD on the iBook, well, the more the merrier.

Option number five – move all of the drives into the Promise enclosure, hook it up to the Ultra 2, and turn off (or not; on its own it’s fairly cheap to operate) the remaining iBook. Power consumption will remain reasonably stable (I hope; I still have no idea how much power the Promise thing consumes, and it might be rated for 6.5 amps on its own). I could install the latest OpenSolaris on the Ultra 2 and format the array using ZFS. No cost savings, lots of work shuffling data around, but also tons of fringe benefits, such as getting back up to date on the latest Solaris tricks.

I just looked at the specs for all the Sun models I own (Ultra 2, Ultra 10, Ultra 60 and E4K), and it seems the U2 consumes the least power of the bunch. The Ultra 10 is rated for the same, but generates twice as much heat. Adapting the Ultra 10 for SCSI operation is not that hard, but would force me to scrounge around for bits and pieces, and dual 300 MHz US II is arguably better than a single 440 MHz US IIi.

I guess there is also option number 6 – replace the Ultra 2 with some sort of low-power semi-embedded x86 system with a PCI slot for a SCSI controller, and hook the Promise array up to it. Install OpenSolaris, format ZFS, migrate the data over. Same benefits as option 5, plus additional hardware costs and having to use an annoying computer architecture.

I guess I will have to decide soon.

Update: the Promise UltraTrak100 TX8 is rated for 8 amps at 110 volts (4 amps at 220 volts).

April 26th, 1986, Pripyat, USSR, 1:25:58 AM local time

On Saturday I had the opportunity to have dinner with a nuclear scientist. He works as one of the technical safety managers (or some such) for Atomic Energy of Canada Limited at Chalk River, near Deep River, Ontario.

We ended up talking about reactor safety, and I mentioned a curious paragraph from the RBMK-1000 (Реактор Большой Мощности Канальный, “High-Power Channel-type Reactor”) design document (it has some pictures for English speakers).

The manual says (and I translate):

Энергоблоки с реакторами РБМК электрической мощностью 1000 МВт (РБМК-1000) находятся в эксплуатации на Ленинградской, Курской, Чернобыльской АЭС, Смоленской АЭС. Они зарекомендовали себя как надежные и безопасные установки с высокими технико-экономическими показателями. Если их специально не взрывать.

Power units with RBMK reactors of 1000 MW electrical capacity (RBMK-1000) are in operation at the Leningrad, Kursk, Chernobyl and Smolensk nuclear power plants. They have proven themselves to be reliable and safe installations with high technical and economic performance. Unless they are deliberately blown up.

I cited that paragraph and asked what the esteemed scientist thought of the last sentence. He told me that what the manual says is basically true: he is familiar with the design and operation of the RBMK-1000, has been in the control room of the Kursk nuclear power plant, and indeed, if you don’t deliberately blow one up, they are perfectly safe, if not as energy-efficient as pressurized water types.

I was referred to a publication titled Chernobyl – A Canadian Perspective, by Dr. Victor Snell, Director of Safety and Licensing at AECL, on the exact reasons why Chernobyl happened.

Personally, I am not interested in laying blame. Who is there to blame? One theory is that the operators were poorly trained, or that they bypassed safety mechanisms on purpose (either due to poor training or due to political pressure). Maybe; however, after reading a book (in Russian) on what really happened by former deputy chief engineer A. S. Dyatlov, I am not so sure. Another theory was a design flaw. Could be. Was it on purpose? I doubt it.

The inventor of RBMK-style reactors and scientific director of the project was Professor A. P. Aleksandrov (in Russian), a full member of the Academy of Sciences of the USSR. The chief designer of the RBMK was Nikolai Dollezhal.

Nuclear power should not be feared. Most of the time, fear comes from a lack of understanding. It should be respected, with proper safety procedures built into it. Decisions like this should not be made by politicians (“Build it faster, who cares about safety, the prestige of the nation is at stake”), Bible (or Quran) thumpers (“For the glory of $DEITY, we will power our homes, and charge up our nukes, and rain fire and brimstone from above on anyone who disagrees with us!”), or hillbillies with loud voices (“Not in my backyard”). They should be made by folks who actually know what they are doing, and care about things like safety, and

Personally, I fully realize that there are things I know nothing about. I can play a scientist on the interweb (because anyone can be anyone on the interweb, and if you sound reasonably competent, most of the time people will think you are an authority), but I should not be in charge of nuclear plant design (or run a government, etc.). However, there are folks out there who have dedicated their lives to nuclear energy.

If Patrick Moore, one of the original founders of Greenpeace, now thinks that nuclear is the way to go, maybe he actually knows what he is talking about. If Stewart Brand, original publisher of the “Whole Earth Catalog”, thinks that folks should all read Rad Decision, maybe he is right.

So for a few moments, please remember all the folks who died while harnessing nuclear energy. Not only those who died from thyroid cancer in the Chernobyl aftermath, but also the Hiroshima and Nagasaki victims, even Marie Skłodowska-Curie.

Some links to photos:

Special project of lenta.ru on the 20th anniversary of the Chernobyl nuclear accident (with photos).

JTAG

Just some JTAG-related randomness, since I touched on it in a previous post.

Linksys WRT54Gx

The Linksys WRT54G and WRT54GS have JTAG headers on the motherboard. It is possible to solder pins into the header and build a Wiggler-style cable to re-flash the bootloader if you’ve ended up hosing it.

Personally, I’ve done it on two WRT54GSes, and one of the main changes I made was to set the boot_wait variable to on by default in the bootloader.

As the Wiggler hooks up over the parallel port, and thus is slow as sin, reflashing anything but the bootloader (i.e., flashing actual firmware over JTAG) would take days.

CS6400

The CS6400 had an SBus-based JTAG interface for hardware tests. The CS6400 was Cray Research Superservers Inc.’s Cray Superserver 6400. If I remember correctly, it had up to 8 XDBuses and up to 64 SuperSPARC II MBus-based CPUs at either 75 or 85 MHz (the same ones that could be used in Sun SPARCstation 10s and SPARCstation 20s).

These were rather elusive beasts, and while I used to own a SPARCserver 1000 (a 12.5 A space heater that sounded like a jet taking off), and there was a SPARCcenter 2000 (a rackful of 3-phase joy) that I played with, I’ve never touched a CS6400.

In fact, I suspect that few people at Sun even knew what one was. There were some parts schematics and option lists printed in the paper versions of the Sun Field Engineer Handbook, but the CS6400 was a product Sun inherited when it bought Cray Research Superservers/Floating Point Systems from SGI. Sun used the Cray technology to build shiny things like the Enterprise xx00 (Campfire) and Enterprise 10000 (Starfire) product lines.

JTAG was the primary means of hardware diagnostics on the E10K, and was connected to the System Service Processor (SSP, commonly an Ultra 5).

P.S. Sun, why did you lock the vast majority of useful FEH content away for subscribers only? Oh, and Sun, will you sue me if I dig out an older version of the FEH that you guys used to ship on a CD and make it available on the interweb?

I distinctly remember using JTAG to recover something else in the past, but my memory fails me. It might have been a Nokia cell phone, or it might have been some sort of uPCI x86 system.

OpenBIOS

In my previous post I waxed lyrical about the IEEE 1275 standard, also known as Open Firmware.

Comrades in the field pointed me towards the OpenBIOS project, a GPL-licensed implementation of Open Firmware. Currently it seems almost usable by mere mortals, as long as said mere mortals have a supported motherboard and want to boot Linux 2.2 or newer on it.

Sadly, the average consumer doesn’t look for a “JTAG header” on the feature list when shopping for a new motherboard, nor is he particularly interested in a socketed BIOS flash chip (which would also allow one to re-flash the BIOS). Average users (those mythical beasts) also probably don’t have the expertise on hand to use JTAG if they flashed a broken OpenBIOS image and ended up up the creek without a paddle.

I’ll take Open Firmware (and OpenBIOS) over EFI any time of the day, because EFI is essentially a black box controlled by Intel, and without vendor consent the end user doesn’t really control his PC (for a close-to-home example, see the recent efforts to get Windows XP running on x86-based Macintosh systems, which ended with Apple releasing an EFI extension implementing PC BIOS compatibility as part of the Boot Camp package). My fear is that hardware is essentially just another black box, and without vendor consent, implementing a free BIOS replacement is nearly impossible. That is, supporting hardware ends up being a process of reverse engineering, which is not simple and which few people want to do for free.

I hate to sound like Richard Stallman, but with EFI locking one’s PC functionality away at the BIOS level, and with the recent trend of OS kernels loading only signed kernel modules (Microsoft claims that admins will still be able to load unsigned drivers in Vista, but who is willing to bet that this functionality won’t eventually get deprecated and removed?), are you really in control of your computer?

“Stany is adorkable and I love her”

So on a whim I did a Technorati search on myself. This came up (and I was persistent, and searched for “stany” on that page).

While I resemble the fact that I am adorkable (except when low on sugar or caffeine), I do not resemble the fact that I am a her.

Googled for “stany”. On the first page, found one of my creations from way back when I actually hacked away on a NetWinder.

Whew.

I guess the WRT54GS is the NetWinder of the new millennium. Only it’s cheaper. And better supported. And it has better network connectivity, but no hard drive (unless you bring out the USB port and use a USB key, or bring out GPIO lines and use an MMC card reader).

I should e-mail ralphs, find out how he’s been. Does ralphs@n.o e-mail addy still work?

Netopia R-Series

Netopia R-Series routers

Sea-green boxes, eight 10bt ethernet ports on the back in hub configuration (with a switch for cross-over on port 1), space for two expantion daughterboards inside. Serial console port, one additional serial port for external modem. Netopia model line.

Depending on the NVRAM settings, can report itself as an R (router) or D (bridge) model. At one point one could call Netopia technical support, and over the phone recieve a free license key, that would convert an R model into a D model. Last time I’ve called them (Summer of 2003), they were reluctant to provide me with the license codes for two units I was converting to bridging mode.

Their argument was that the serial numbers I provided were “too old”, that is, sold to a customer more than 2 years ago. (The feature keys are bound to the serial number of the router, which in turn is locked to its MAC address.)

Old serial numbers start with 72, new ones start with 80.
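A tiny sketch of that prefix rule, assuming serial numbers are handled as strings. `serial_generation` is a hypothetical helper and the example serials are made up; 72 and 80 are the only prefixes I’ve seen, so anything else is reported as unknown rather than guessed.

```python
def serial_generation(serial: str) -> str:
    """Classify a Netopia R-Series serial number by its leading digits.

    Only the two prefixes observed in practice are known; everything
    else is reported as "unknown" instead of being guessed.
    """
    s = serial.strip()
    if s.startswith("72"):
        return "old"   # older units, which support considered "too old" for free keys
    if s.startswith("80"):
        return "new"
    return "unknown"


print(serial_generation("72000001"))  # old (made-up serial)
print(serial_generation("80000001"))  # new (made-up serial)
```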

These might also correlate with the revision of the logic board inside: older logic boards have two 72-pin RAM slots soldered on, while newer boards have the pads but no actual sockets.

Out of the box, a Netopia unit reports that it has 1 meg of flash and 4 megs of RAM. I’ve tried plugging in some 4, 8 and 16 meg 72-pin RAM sticks, but each time the router would not report anything over the serial console, and eventually would blink all 8 green LEDs corresponding to the 10bt ports about once a second. This might have to do with the type of RAM I used (I tried double-sided RAM, pairs of 8 meg sticks, and single 4, 8 and 16 meg sticks).

A box without any daughtercards inside reports itself as model 1300, so depending on the feature key, it is either an R1300 or a D1300.

I’ve handled three types of these units: R7100, R7200 and R9100. The only difference is the daughtercard inside; otherwise the units are identical, apart from logic board revisions.

They support firewalling between the hub and the WAN interface, can serve DHCP, and can detect a WAN link failure. If an external modem is connected to the Auxiliary serial port, the Netopia can be configured to dial out and use PPP as a fallback.
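The dial-backup behaviour can be pictured as a small state machine. This is a hypothetical sketch of the general idea, not Netopia’s firmware logic: `choose_uplinks`, the three-consecutive-failures threshold, and switching back as soon as the WAN link returns are all my own assumptions.

```python
from enum import Enum
from typing import Iterable, List


class Uplink(Enum):
    WAN = "wan"              # the daughtercard WAN link
    MODEM_PPP = "modem_ppp"  # dial-out PPP over the Auxiliary serial port


def choose_uplinks(link_up_samples: Iterable[bool], fail_threshold: int = 3) -> List[Uplink]:
    """Return the uplink in use after each WAN link-status sample.

    Fails over to PPP only after `fail_threshold` consecutive failed samples,
    and returns to the WAN as soon as a sample shows the link is up again.
    """
    active = Uplink.WAN
    consecutive_failures = 0
    choices = []
    for up in link_up_samples:
        if up:
            consecutive_failures = 0
            active = Uplink.WAN
        else:
            consecutive_failures += 1
            if consecutive_failures >= fail_threshold:
                active = Uplink.MODEM_PPP
        choices.append(active)
    return choices


print([u.value for u in choose_uplinks([True, False, False, False, True])])
# ['wan', 'wan', 'wan', 'modem_ppp', 'wan']
```

Requiring several failed samples in a row avoids dialing out on a single missed check.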

Technically they support 10 users, and if one wants more, one has to buy a feature key from Netopia. I’ve never hit the limit, so I am not sure if this is the number of MACs it remembers, or something else.

There are also pins on the motherboard where additional hardware can be mounted. Netopia sells a VPN accelerator for these, so presumably it is a separate encryption module that mounts inside the unit. In Netopia lingo, the VPN accelerator is called TER/XL VPN.

The R7100 has a single Copper Mountain Networks SDSL daughtercard on board. It can synchronise at up to 1.5 megabit to Copper Mountain equipment. These are the most interesting daughtercards, as they support back-to-back communication: one unit is configured to take its clock source from the network, the other to generate its clock internally, and then the units can be connected over a copper pair. I’ve had success synchronizing at 768Kb/sec over a distance of 1.6km on a Bell Canada LDDS circuit (an unbalanced copper pair, primarily used for alarm circuits).
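The clocking rule for a back-to-back link boils down to “exactly one end generates the clock”. A minimal sketch, assuming the labels `internal` and `network` for the two clock-source settings (illustrative, not the actual Netopia menu strings) and a hypothetical `clock_master` helper:

```python
from dataclasses import dataclass


@dataclass
class SdslEnd:
    """One end of a back-to-back SDSL link (hypothetical model)."""
    name: str
    clock_source: str  # "internal" (generates the clock) or "network" (recovers it from the line)


def clock_master(a: SdslEnd, b: SdslEnd) -> str:
    """Return the name of the end that generates the line clock.

    Raises ValueError unless exactly one end is "internal" and the other is
    "network": with two generators or two followers the link has no single
    timing reference.
    """
    if sorted([a.clock_source, b.clock_source]) != ["internal", "network"]:
        raise ValueError(
            f"need one internal and one network clock, got {a.clock_source!r} and {b.clock_source!r}"
        )
    return a.name if a.clock_source == "internal" else b.name


print(clock_master(SdslEnd("office-a", "network"), SdslEnd("office-b", "internal")))  # office-b
```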

The R7200 has a single ATM SDSL daughtercard on board. Maximum speed is 2.3 megabit in Nokia Fixed Mode. These can be configured to communicate with various types of DSLAMs, including Nokia and Paradyne gear. I don’t believe they support generating the clock internally, so they can’t be used for a private interconnection without a DSLAM.

The R9100 has a 10 megabit daughtercard that can be used for routing, or for ATM or IP encapsulation. I have one, but that’s about it. Supposedly these are used to talk to a DSL or cable modem and do NAT, etc.

The R3100 is the same chassis with an ISDN daughtercard. Covad used to deploy those with customers. I’ve never handled one myself. Supposedly daughtercards with U, UP and S/T interfaces exist.

The R5100 is the same chassis with a serial V.24/V.35 daughtercard.

The R5300 is the same chassis with a T1 WAN port. Again, I’ve never used one myself.

Two daughtercards can be installed in a single unit. The daughtercards are interchangeable, so if you have two identical routers with a single daughtercard each, the card can be removed from one and added to the other. The model number reported by the firmware changes as a result: its last two digits change from 00 to the corresponding daughtercard number.

So, R7272 would have two ATM SDSL daughtercards, D7171 would have two Copper Mountain SDSL daughtercards, etc.

Mixing and matching is possible, and the firmware will report the model accordingly. If a daughtercard is installed only in the second slot, the first two digits of the model number remain “13”.

Rxx20 – Has a V.90 analog daughtercard for fallback

Rxx31 – ISDN fallback (or in case of R3131, it would have 2 ISDN ports)
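The numbering scheme is regular enough to compute. A sketch, assuming the two-digit card codes can be read off the model numbers mentioned in this post (71, 72, 91, 31, 51, 53, 20); the card labels and `model_number` are hypothetical names, and the R/D prefix stands in for the router or bridge feature key.

```python
from typing import Optional

# Two-digit daughtercard codes, inferred from the model numbers in this post.
# The dictionary keys are illustrative labels, not Netopia part names.
CARD_CODES = {
    "copper_mountain_sdsl": "71",  # R7100
    "atm_sdsl": "72",              # R7200
    "10mbit": "91",                # R9100
    "isdn": "31",                  # R3100
    "serial_v35": "51",            # R5100 (V.24/V.35)
    "t1": "53",                    # R5300
    "v90_analog": "20",            # Rxx20 dial fallback
}


def model_number(slot1: Optional[str], slot2: Optional[str], bridge: bool = False) -> str:
    """Build the model number the firmware should report for a card layout.

    An empty first slot keeps the base "13"; an empty second slot keeps "00".
    The prefix is "D" for a bridge feature key and "R" for a router one.
    """
    prefix = "D" if bridge else "R"
    first = CARD_CODES[slot1] if slot1 else "13"
    last = CARD_CODES[slot2] if slot2 else "00"
    return f"{prefix}{first}{last}"


print(model_number(None, None))                                                   # R1300
print(model_number("atm_sdsl", "atm_sdsl"))                                       # R7272
print(model_number("copper_mountain_sdsl", "copper_mountain_sdsl", bridge=True))  # D7171
print(model_number(None, "v90_analog"))                                           # R1320 (what the rule predicts)
```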

If two identical daughtercards are installed, you might need an IMUX feature key from Netopia. When I last checked, Netopia wanted 150 USD for each key. The IMUX feature key enables WAN interface bonding, so, theoretically, if you have two D7171s, both with IMUX keys, and a 4-wire LDDS circuit running between two branch offices, you can bridge the two at 3 megabit (two pairs at 1.5 megabit each). On the R9191 and R7272, the IMUX feature enables multi-link PPP over ATM as a form of bonding.
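Multi-link PPP (RFC 1990) bonds links by splitting traffic into sequence-numbered fragments, sending them over all member links, and reordering them at the far end, so the usable rate is roughly the sum of the links (2 × 1.5 = 3 megabit, minus protocol overhead). The sketch below only illustrates that idea with hypothetical `stripe` and `reassemble` helpers and no real framing; it says nothing about how Netopia’s IMUX is implemented internally.

```python
from itertools import cycle
from typing import List, Tuple

# (sequence number, payload) pairs, loosely modelled on multilink fragments.
Fragment = Tuple[int, bytes]


def stripe(packet: bytes, n_links: int, frag_size: int) -> List[List[Fragment]]:
    """Split a packet into numbered fragments and deal them round-robin over links."""
    if n_links < 1 or frag_size < 1:
        raise ValueError("need at least one link and a positive fragment size")
    links: List[List[Fragment]] = [[] for _ in range(n_links)]
    link_ids = cycle(range(n_links))
    for seq, start in enumerate(range(0, len(packet), frag_size)):
        links[next(link_ids)].append((seq, packet[start:start + frag_size]))
    return links


def reassemble(links: List[List[Fragment]]) -> bytes:
    """Merge fragments from every link and restore the original order by sequence number."""
    fragments = sorted((frag for link in links for frag in link), key=lambda f: f[0])
    return b"".join(payload for _, payload in fragments)


links = stripe(b"hello world", n_links=2, frag_size=3)
print(links)              # [[(0, b'hel'), (2, b'wor')], [(1, b'lo '), (3, b'ld')]]
print(reassemble(links))  # b'hello world'
```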

“Regular” firmware doesn’t support configuring the second WAN interface out of the box. My theory is that all the IMUX feature key does is tell the firmware to present the menus for configuring the second WAN interface.

Overall, Netopia units can be procured on eBay for ~20 USD apiece, so the cost of an IMUX feature key (150 USD, more than seven times the price of the unit) is, in my opinion, unreasonable.

Currently the latest firmware is 4.11, and Netopia has a list of changes between firmware versions.